Red Hat OpenShift Dual-Region

This guide is designed to assist users aiming to deploy Camunda 8 in a dual-region setup on Red Hat OpenShift.

The primary goal is to configure and integrate two OpenShift clusters for use in the dual-region reference architecture. This setup leverages specific components to address key challenges, and users are encouraged to exercise discretion regarding their use (learn more about Advanced Cluster Management). While this guide does not cover every possible configuration, it provides the key steps needed to achieve the desired outcome.

To enable communication between regions, we will use Advanced Cluster Management (ACM) and Submariner. Failover will be managed through DNS configuration, while access to the platform will be provided via OpenShift Routes.

Additionally, depending on whether your OpenShift clusters are managed on a cloud provider or deployed on-premises, certain aspects of the configuration might require adaptation.

Please review our dual-region concept documentation to understand the limitations and constraints of this blueprint before proceeding.

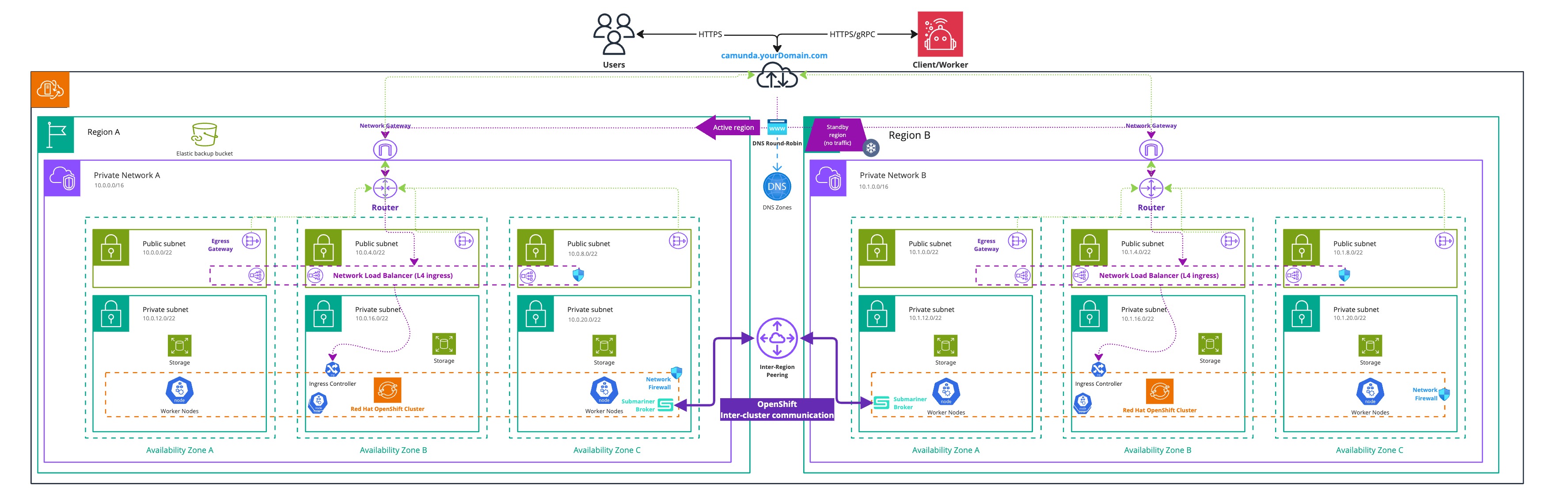

High Level Design

Infrastructure diagram for a OpenShift dual-region setup (click on the image to open the PDF version)

This High-Level Design describes how the following critical components interact to achieve a Camunda 8 deployment across two regions:

- An S3-compatible solution is used for taking snapshots of the Elasticsearch database.

- A DNS entry with an associated domain is used to enable Camunda 8 failover and reroute traffic from one cluster to the other.

- Firewall and networking components are configured to allow unrestricted communication between the two clusters.

- Local storage is provided on each OpenShift cluster for persistent data requirements.

- A non-overlapping network configuration is implemented on the OpenShift clusters. This is a mandatory requirement, as outlined in the Submariner overlapping guide.

- Red Hat OpenShift Advanced Cluster Management is used to manage the two clusters and configure Submariner.

- Submariner is configured on the two clusters to enable cross-namespace and cross-cluster network communication.
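The non-overlapping network requirement listed above can be checked before installing anything. The following is a minimal sketch, not part of the reference architecture: the function names `ip_to_int` and `cidr_overlap` are hypothetical, and the CIDR values in the usage note are example values only. It relies on the fact that two IPv4 CIDR blocks overlap exactly when they share the same network bits under the shorter of the two prefixes.

```shell
# Convert a dotted IPv4 address (e.g. 10.128.0.0) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split the address on dots into the positional parameters $1..$4.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 (true) if the two CIDR blocks given as $1 and $2 overlap.
cidr_overlap() {
  local net1=${1%/*} len1=${1#*/} net2=${2%/*} len2=${2#*/}
  # Compare both networks under the shorter (less specific) prefix.
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$net1") & mask )) -eq $(( $(ip_to_int "$net2") & mask )) ]
}
```

For example, `cidr_overlap 10.128.0.0/14 10.132.0.0/14` returns a non-zero status (the ranges are disjoint), while `cidr_overlap 10.128.0.0/14 10.130.0.0/16` returns zero (the second range lies inside the first). Run the check for both the pod and the service networks of the two clusters.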

Requirements

- You need access to the Advanced Cluster Management operator and the Submariner operator.

- The network latency between the clusters must stay within the limits outlined in the installation environment guidelines.

- Each of your OpenShift clusters must meet at least the minimum capacity requirements for a cluster. Refer to the cluster specification guide for details on resource allocation and infrastructure needs.

- Administrative privileges are required for both clusters to perform configurations and operator deployments.

- A reliable means of communication between the two clusters is necessary. Ensure that each cluster can establish network connections with the other.

- The version of your OpenShift clusters must be included in the supported versions list.

- Review the requirements of OpenShift Advanced Cluster Management if it's not already configured.

- Review the requirements of OpenShift Submariner if it's not already configured.

- Review the requirements of Submariner itself if it's not already configured, especially the network flows that must be opened.

CLI Requirements

In addition to the general prerequisites outlined above, CLI tools are required for interacting with the clusters and deploying Camunda 8. These are the same CLI tools listed in the OpenShift Guide, plus the following:

- subctl, a CLI tool used to interact with Submariner.

OpenShift clusters

The architecture of your OpenShift clusters may vary depending on your specific configuration. This guide assumes a generic deployment on OpenShift. If you are interested in a tested topology, follow the ROSA Dual-Region Guide.

Cluster requirements

To deploy infrastructure components successfully, you need the cluster-admin role on the OpenShift clusters you use, which grants full privileges within the cluster. This level of access is necessary for the proper setup and configuration of the infrastructure components.

However, Camunda 8 deployment does not require cluster-admin access. For the best security practice and to follow the principle of least privilege, it is recommended to deploy Camunda 8 with a standard, limited user. This approach helps minimize security risks by restricting unnecessary administrative privileges.

Setup Advanced Cluster Management and Submariner

To achieve cross-cluster communication, we use the recommended Submariner solution, which is designed to securely connect workloads across multiple Kubernetes clusters.

The installation of Submariner on OpenShift differs from the standard installation, as it requires Red Hat Advanced Cluster Management for Kubernetes.

You can achieve the same installation without Red Hat Advanced Cluster Management for Kubernetes. However, this guide focuses on the officially supported deployment of Submariner.

Advanced Cluster Management

If Red Hat Advanced Cluster Management is not enabled on your cluster, you need to enable it. The following steps are an extract from the official instructions, which you may want to refer to for details of the implementation and associated customizations.

The following installation instructions for Advanced Cluster Management are intended for non-production environments.

For a production setup, please consult the official Red Hat Advanced Cluster Management guide.

The cluster in region 1 is referred to as local-cluster in ACM. This designation cannot be changed, as it is a constant name used to reference the managed hub cluster.

Later in this guide, we will refer to it as the first cluster.

- This part of the guide uses a generic reference architecture; you can find all the ACM files here.

- Reference each cluster context name and ensure that each cluster's context name matches the corresponding cluster name. If the context name does not match, you will need to rename it to follow this guide.

  File: /generic/openshift/dual-region/procedure/set-cluster-names.sh

- The following manifest will create a namespace for the management cluster, and enable the open-cluster-management operator and the associated subscription.

  File: generic/openshift/dual-region/procedure/acm/install-manifest.yml

  Save this manifest as install-manifest.yml, then apply it to enable ACM:

  File: generic/openshift/dual-region/procedure/acm/install-acm.sh

  Verify that the installation succeeded:

  File: generic/openshift/dual-region/procedure/acm/verify-acm.sh

- With the ACM operator now enabled on the first cluster, the next step is to create the Multicluster Global Hub. This feature allows you to import and manage one or more hub clusters from a single central hub cluster. In this setup, the first cluster will act as the central hub, managing the second cluster. This capability enables the deployment and management of components on the second cluster directly from the first cluster.

  File: generic/openshift/dual-region/procedure/acm/multi-cluster-hub.yml

  Known issue: creating the MultiClusterHub from the manifest may not work correctly. The MultiClusterHub can sometimes remain stuck in the installation phase when created this way. To avoid this issue, you can follow the official instructions in the OpenShift UI Console.

  Save this manifest as multi-cluster-hub.yml, then apply it to create the MultiClusterHub:

  File: generic/openshift/dual-region/procedure/acm/install-multi-cluster-hub.sh

  Wait until the status shows as "Running". This process can take up to 10 minutes:

  File: generic/openshift/dual-region/procedure/acm/verify-multi-cluster-hub.sh

  Security consideration:

  - A ServiceAccount with a ClusterRoleBinding automatically gives cluster administrator privileges to Red Hat Advanced Cluster Management and to any user credentials with access to the namespace where you install Red Hat Advanced Cluster Management (open-cluster-management here). Learn more about this in the official documentation.

  - A namespace called local-cluster is reserved for the Red Hat Advanced Cluster Management hub cluster when it is self-managed. This is the only local-cluster namespace that can exist.

  - ⚠️ For security reasons, do not give access to the local-cluster namespace to any user that is not a cluster administrator.

- With the MultiClusterHub created, the last step is to create a ManagedClusterSet, which is a group of managed clusters. With a ManagedClusterSet, you can manage access to all of the managed clusters in the group together.

  File: generic/openshift/dual-region/procedure/acm/managed-cluster-set.yml

  Save this manifest as managed-cluster-set.yml, then apply it to create the ManagedClusterSet:

  File: generic/openshift/dual-region/procedure/acm/install-managed-cluster-set.sh

  Verify that the ManagedClusterSet has been created. At this step, only local-cluster will be listed:

  File: generic/openshift/dual-region/procedure/acm/verify-managed-cluster-set.sh

- After creating the Managed Cluster Set, the next step is to import clusters into the set.

  - To import a cluster, you need to template the manifest for each cluster.

    Save the following file as managed-cluster.yml.tpl:

    File: generic/openshift/dual-region/procedure/acm/managed-cluster.yml.tpl

  - The cluster's associated addon configuration will be managed using the following manifest.

    Save it as klusterlet-config.yml.tpl:

    File: generic/openshift/dual-region/procedure/acm/klusterlet-config.yml.tpl

  - To import a cluster, you must store the associated authentication token.

    Save the following file as auto-import-cluster-secret.yml.tpl:

    File: generic/openshift/dual-region/procedure/acm/auto-import-cluster-secret.yml.tpl

  - Finally, import each cluster into the Managed Cluster Set and verify that they can be reached and managed successfully:

    File: generic/openshift/dual-region/procedure/acm/initiate-cluster-set.sh

  - Once all the clusters are imported, verify that all of them are available and reachable:

    File: generic/openshift/dual-region/procedure/acm/verify-managed-cluster-set.sh
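Several steps above ask you to wait until a resource reports a given status, for example until the MultiClusterHub shows "Running". A generic polling helper can make that wait scriptable. This is a minimal sketch under stated assumptions: `wait_for_value` is a hypothetical helper, not one of the reference architecture scripts, and the `oc` query in the comment assumes the MultiClusterHub lives in the open-cluster-management namespace and exposes its state under `.status.phase`.

```shell
# wait_for_value EXPECTED TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND until its output equals EXPECTED or the timeout elapses.
wait_for_value() {
  local expected="$1" timeout="$2" interval="$3" elapsed=0 actual
  shift 3
  while :; do
    # A failing command is treated as "no value yet".
    actual="$("$@" 2>/dev/null)" || actual=""
    if [ "$actual" = "$expected" ]; then
      return 0
    fi
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${elapsed}s; last value: '${actual}'" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
}

# Example call (assumed context, namespace and JSONPath; adapt to your setup):
# wait_for_value Running 600 15 \
#   oc --context local-cluster get multiclusterhub -n open-cluster-management \
#   -o jsonpath='{.items[0].status.phase}'
```

Passing the command as arguments, rather than hard-coding it, lets the same helper be reused for other readiness checks in this guide.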

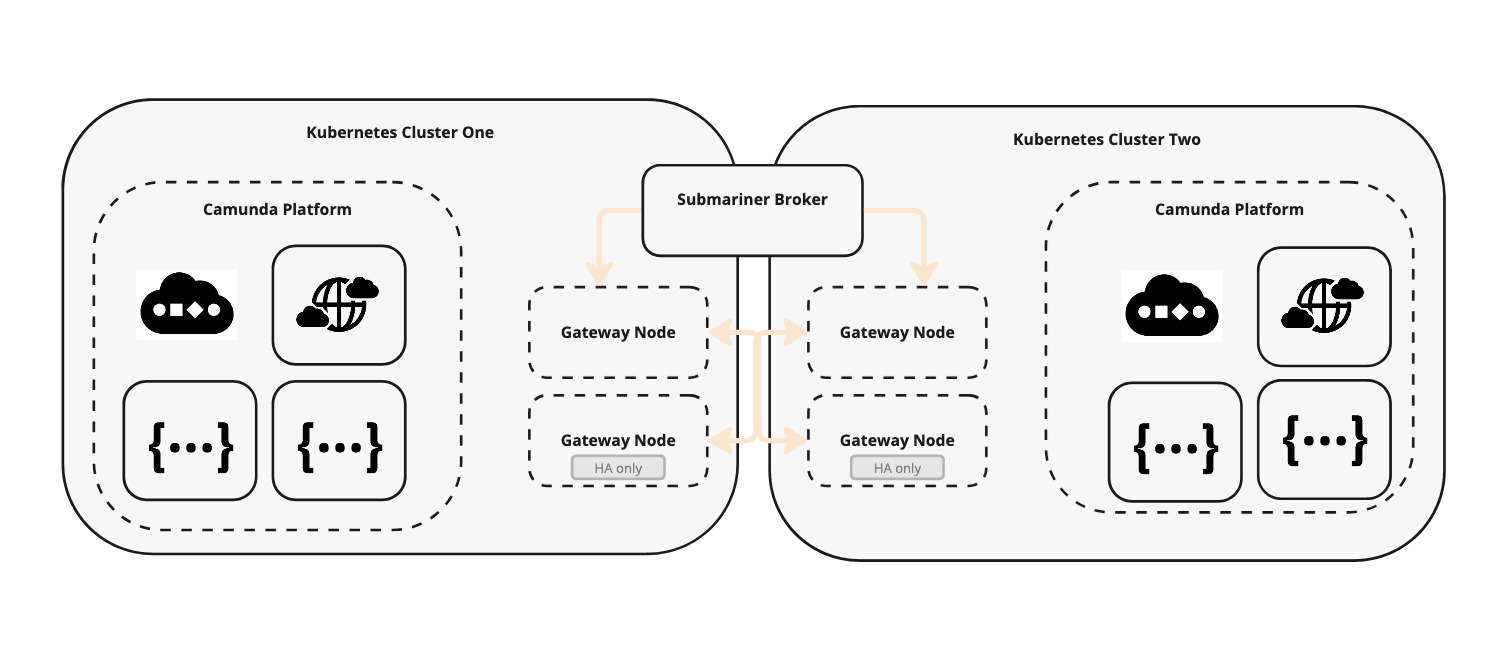

Submariner

The architecture of Submariner comprises several components working together to enable direct networking between Pods and Services across different Kubernetes clusters.

The following diagram illustrates the interaction between the two clusters:

Infrastructure diagram of Submariner setup

- Traffic sent from one broker to another cluster can be encrypted by the Gateway Engine. In OpenShift, the IPSec protocol is used on port 4500/UDP, utilizing the Libreswan implementation.

- A dedicated node in each cluster assumes the Broker Role, facilitating the exchange of metadata between Gateway Engines in participating clusters. This component is not responsible for transmitting data, unlike the Gateway Engine, which handles data transmission between internal networks of different clusters. High availability can be achieved by adding a second dedicated node.

- Service discovery is managed internally by the Lighthouse project.

- The Route Agent component runs on every node in each participating cluster. It sets up the necessary host network elements on top of the existing Kubernetes CNI plugin.

This guide does not cover handling overlapping CIDRs. However, this can be achieved using the Globalnet Controller.

Installing Submariner in OpenShift requires Advanced Cluster Management to be configured, with each cluster added to the management cluster.

- This part of the guide uses a generic reference architecture; you can find all the Submariner files here.

- Ensure cluster context names match (skip this step if already completed as part of Advanced Cluster Management):

  Verify that each cluster's context name matches its corresponding cluster name. If the context name does not match, rename it to align with this guide.

  File: /generic/openshift/dual-region/procedure/set-cluster-names.sh

- Verify dedicated broker nodes: confirm that each cluster has nodes labeled for Submariner gateway functionality:

  File: /generic/openshift/dual-region/procedure/submariner/list-nodes-brokers.sh

  If no nodes are labeled, you need to label at least one node in each cluster. For better reliability, consider dedicating a node as the broker.

  Assigning broker node labels: select the first node and apply the required label:

  File: /generic/openshift/dual-region/procedure/submariner/label-nodes-brokers.sh

- Deployment of Submariner on the clusters:

  - Save the following file as submariner.yml.tpl:

    File: generic/openshift/dual-region/procedure/submariner/submariner.yml.tpl

    Cluster naming: in this example, the first cluster is referenced as local-cluster. This is because the first cluster is used as the management cluster in this minimal setup. If your cluster is named differently, you may need to adapt this file to match your actual cluster name.

  - Then apply it on the management cluster:

    File: /generic/openshift/dual-region/procedure/submariner/install-submariner.sh

  - Wait for the brokers to become ready. This may take up to 10 minutes. You can check the broker status using the following command:

    File: /generic/openshift/dual-region/procedure/submariner/verify-submariner.sh

- After deploying Submariner, check that the clusters can communicate with each other by using the subctl utility. Keep in mind that it might take several minutes before all status indicators turn green.

  If you don't have the subctl CLI installed, you can follow the installation instructions here or execute the following commands:

  File: /generic/openshift/dual-region/procedure/submariner/install-subctl.sh

  Now, verify communication between the clusters with the following script:

  File: /generic/openshift/dual-region/procedure/submariner/verify-subctl.sh

  If everything is set up correctly, you should observe the following statuses in the output of each cluster context:

  - Gateway's status: All connections (1) are established
  - Connection's status: connected 10.406614ms (RTT)

  Example of successful Submariner check output:

  File: generic/openshift/dual-region/procedure/submariner/output.txt

For more comprehensive details regarding the verification tests for Submariner using subctl, please refer to the official documentation.
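The gateway labeling and connectivity checks described in the steps above can be sketched as a few small shell functions. This is an illustrative sketch, not the content of the reference scripts: the function names are hypothetical, the label `submariner.io/gateway=true` is the label Submariner uses to select gateway nodes, and the `--context` flag on `subctl` is assumed to be available in your subctl version. By default the commands are only printed (`DRY_RUN=1`), so you can review them before running anything against a cluster.

```shell
# Print commands instead of running them unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# $1: kube context. Lists nodes already labeled as Submariner gateways.
list_gateway_nodes() {
  run oc --context "$1" get nodes -l submariner.io/gateway=true
}

# $1: kube context, $2: node name. Labels a node as a Submariner gateway.
label_gateway_node() {
  run oc --context "$1" label node "$2" submariner.io/gateway=true
}

# $1: kube context. Shows the Submariner connections seen from that cluster.
# Assumption: your subctl version accepts --context.
show_connections() {
  run subctl show connections --context "$1"
}
```

For example, `label_gateway_node local-cluster <node-name>` prints the labeling command for the first cluster; set `DRY_RUN=0` once the printed commands match your environment.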

Debugging the Submariner setup:

If you are experiencing connectivity issues, we recommend spawning a pod in the default namespace that contains networking debugging tools. You can find an example here.

With this pod, you will be able to check flow openings, service resolution, and other network-related aspects.

Troubleshooting requires examining all the underlying mechanisms of Submariner. Therefore, we also encourage you to read the Submariner troubleshooting guide.

Deploying Camunda 8 via Helm charts in a dual-region setup

The installation of Camunda 8 in OpenShift across dual regions requires a functioning Submariner setup connecting two OpenShift clusters.

Verify the pre-requisites

Before proceeding with the installation, ensure the required information is available and configured in your terminal for later use. Review and adjust the following environment script to match your specific configuration:

loading...

If you are unsure about the values of the backup bucket, please refer to the S3 backup bucket module setup as a reference for implementation.

Save the file as chart-env.sh, replace the placeholders with your values, and then source the file:

source chart-env.sh

Reference the elastic backup bucket in the clusters

Elasticsearch needs an S3 bucket for the data backup and restore procedure, which is required during a regional failback. To avoid exposing the bucket credentials in cleartext, you will store them in a Kubernetes secret.

If you don’t have access to an S3 bucket, you can adapt the backup method to use an alternative Elasticsearch backup solution. However, this guide focuses solely on S3 snapshots.

The Elasticsearch backup bucket is tied to a specific region. If that region becomes unavailable and you want to restore to a different region or S3 services remain disrupted, you must create a new bucket in another region and reconfigure your Elasticsearch cluster to use the new bucket.

The following script will create the required namespaces and secrets used to reference the bucket access.

loading...

Save it as setup-namespaces-secrets.sh and execute it:

chmod +x setup-namespaces-secrets.sh

./setup-namespaces-secrets.sh

Configure your deployment for each region

Before deploying, some values in the value files need to be updated. To assist with generating these values, save the following Bash script as generate-zeebe-helm-values.sh:

loading...

Then, source the output of the script. This lets you reuse the values later for substitution instead of manually adjusting the values files. You will be prompted to specify the number of Zeebe brokers (the total number of Zeebe brokers across both Kubernetes clusters). For a dual-region setup we recommend 8, resulting in four brokers per region:

chmod +x generate-zeebe-helm-values.sh

CLUSTER_1_NAME='local-cluster' source ./generate-zeebe-helm-values.sh

For those unfamiliar with the Submariner DNS convention, please consult the official documentation.

In this deployment, we are utilizing the service discovery to have Elasticsearch and Zeebe brokers accessible from one cluster to another. Consequently, the values generated by the previous script will not be local services but use svc.clusterset.local.

Make sure that the variable CLUSTER_1_NAME is set to the name of your first cluster. In this example, the value local-cluster is used to maintain consistency with the previous Advanced Cluster Management step.
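To illustrate what such cross-cluster addresses look like, the sketch below builds a list of Zeebe broker contact points using the Submariner naming scheme for pods behind a headless service, `<pod>.<cluster-id>.<service>.<namespace>.svc.clusterset.local`. It is not the elided generate-zeebe-helm-values.sh script. The function name `zeebe_contact_points` is hypothetical, and the Helm release name `camunda`, the service name `camunda-zeebe`, and the internal port `26502` are assumptions that you should check against your deployment.

```shell
# zeebe_contact_points CLUSTER_1 NAMESPACE_1 CLUSTER_2 NAMESPACE_2 BROKERS_PER_REGION
# Prints a comma-separated list of clusterset DNS names for all broker pods.
zeebe_contact_points() {
  local release="camunda" port=26502   # assumed release name and internal port
  local result="" i=0 pair cluster ns entry
  while [ "$i" -lt "$5" ]; do
    for pair in "$1/$2" "$3/$4"; do
      cluster=${pair%%/*}
      ns=${pair#*/}
      entry="${release}-zeebe-${i}.${cluster}.${release}-zeebe.${ns}.svc.clusterset.local:${port}"
      # Append with a comma separator, except for the first entry.
      result="${result:+${result},}${entry}"
    done
    i=$((i + 1))
  done
  printf '%s\n' "$result"
}
```

With eight brokers in total, you would call it with `4` as the last argument, passing `local-cluster` as the first cluster name and the name of your second cluster as the third argument.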

Helm values

Create values-region-1.yml and values-region-2.yml files to store each region's configuration. These files will contain key-value pairs that will be substituted using envsubst.

Throughout this guide, you will add and merge values into these files to configure your deployment according to your requirements.

- Save the following file as both values-region-1.yml and values-region-2.yml to serve as the base configuration:

  File: generic/openshift/dual-region/helm-values/values-base.yml

This guide references multiple configuration files that need to be merged into a single YAML file. Be cautious to avoid duplicate keys when merging the files.

Additionally, pay close attention when copying and pasting YAML content. Ensure that the separator notation --- does not inadvertently split the configuration into multiple documents.

We strongly recommend double-checking your YAML file before applying it. You can use tools like yamllint.com or the YAML Lint CLI if you prefer not to share your information online.

Set up the region ID using a unique integer for each region:

- Add the following YAML configuration to your values-region-1.yml:

  File: generic/openshift/dual-region/helm-values/values-region-1.yml

- Add the following YAML configuration to your values-region-2.yml:

  File: generic/openshift/dual-region/helm-values/values-region-2.yml

Security Context Constraints (SCCs)

The process of deploying applications in an OpenShift cluster can be influenced by its Security Context Constraints (SCCs) configuration. By default, OpenShift comes with more restrictive SCCs. For the purposes of this guide, which focuses on multi-region deployment, we assume this to be the standard setup.

For custom configurations or specific requirements, please refer to the installation guide for OpenShift which details the various available SCC options.

Fill your deployment with actual values

Once you've prepared each region's values file (values-region-1.yml and values-region-2.yml), run the following envsubst command to substitute the environment variables with their actual values:

loading...
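Because envsubst silently replaces unset variables with empty strings, it can help to check that every variable referenced in a values file is exported before substituting. The following is a minimal sketch of that idea, not the command from this guide: `render_values` is a hypothetical helper, and the output file name in the usage note is an assumption.

```shell
# render_values SOURCE_FILE OUTPUT_FILE
# Fails if any variable referenced in SOURCE_FILE is not exported;
# otherwise writes the substituted content to OUTPUT_FILE.
render_values() {
  local src="$1" dst="$2" var missing=0
  # "envsubst --variables" lists the variable names found in its argument.
  for var in $(envsubst --variables -- "$(cat "$src")"); do
    if ! printenv "$var" >/dev/null; then
      echo "missing environment variable: $var" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] || return 1
  envsubst < "$src" > "$dst"
}
```

For example, `render_values values-region-1.yml generated-values-region-1.yml` writes the substituted file only when all referenced variables are exported. Writing to a separate output file keeps the original template available for later runs.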

Install Camunda 8 using Helm

With the value files for each region configured, you can now install Camunda 8 using Helm. Execute the following commands:

loading...

This command:

- Installs (or upgrades) the Camunda platform using the Helm chart on each cluster.

- Substitutes the appropriate version using the $CAMUNDA_HELM_CHART_VERSION environment variable.

- Applies the configuration from the value file.

⚠️ Installation is not complete yet: at this stage, the two Camunda 8 deployments cannot communicate. You need to follow the next step to complete the installation.

This guide uses helm upgrade --install because it performs an install on the initial deployment and an upgrade on subsequent runs. This makes future Camunda 8 Helm upgrades, or upgrades of any other component, easier.
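As an illustration of the helm upgrade --install pattern, the sketch below prints (or runs) one installation command per region. It is not the elided command from this guide. The function name `install_region`, the release name `camunda`, the chart reference `camunda/camunda-platform`, and the contexts, namespaces, and file names in the usage note are assumptions; only `$CAMUNDA_HELM_CHART_VERSION` comes from the text above.

```shell
# Print commands instead of running them unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# install_region KUBE_CONTEXT NAMESPACE VALUES_FILE
# Installs the release on first run and upgrades it on later runs.
install_region() {
  run helm upgrade --install camunda camunda/camunda-platform \
    --version "$CAMUNDA_HELM_CHART_VERSION" \
    --kube-context "$1" \
    --namespace "$2" \
    -f "$3"
}
```

For example, `install_region local-cluster camunda-cluster-region-1 generated-values-region-1.yml` prints the command for the first region. Because the same command handles both install and upgrade, it can be re-run safely after changing the values file.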

Export Camunda 8 services using Submariner

Once Camunda is deployed across the two clusters, the next step is to expose each service to Submariner so it can be resolved by the other cluster:

loading...

Alternatively, you can manage each service individually using the ServiceExport Custom Resource Definition (CRD).

Example of the ServiceExport manifest

    apiVersion: multicluster.x-k8s.io/v1alpha1
    kind: ServiceExport
    metadata:
      name: camunda-elasticsearch # name of the service to export
      namespace: camunda-cluster-region-2 # namespace of the service
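If you manage exports individually, generating the ServiceExport manifest from a small function avoids copy-paste errors when several services need to be exported. This is a minimal sketch based on the manifest above: `service_export_manifest` is a hypothetical helper, and the context name in the usage note is a placeholder.

```shell
# service_export_manifest SERVICE_NAME NAMESPACE
# Prints a ServiceExport manifest; its name must match the exported service.
service_export_manifest() {
  cat <<EOF
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  name: $1
  namespace: $2
EOF
}
```

The output can be piped to the cluster that hosts the service, for example `service_export_manifest camunda-elasticsearch camunda-cluster-region-2 | kubectl --context <second-cluster-context> apply -f -`.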

For each cluster, verify the status of the exported services with this script:

loading...

To monitor the progress of the installation, save and execute the following script:

loading...

Save it as check-deployment-ready.sh, make it executable, and run it:

chmod +x check-deployment-ready.sh

./check-deployment-ready.sh

Verify connectivity to Camunda 8

The following script will port-forward the Zeebe Gateway via kubectl from one of your clusters. Zeebe stretches across both clusters and is active-active, so it doesn't matter which Zeebe Gateway you use to interact with your Zeebe cluster.

loading...

Make sure that your output contains all eight brokers from the two regions:

Example output

loading...

Failover

Consult the generic dual-region failover procedure.

Pitfalls to avoid

For general deployment pitfalls, visit the deployment troubleshooting guide.