Build your first AI agent

Get started with Camunda agentic orchestration by building and running your first AI agent.

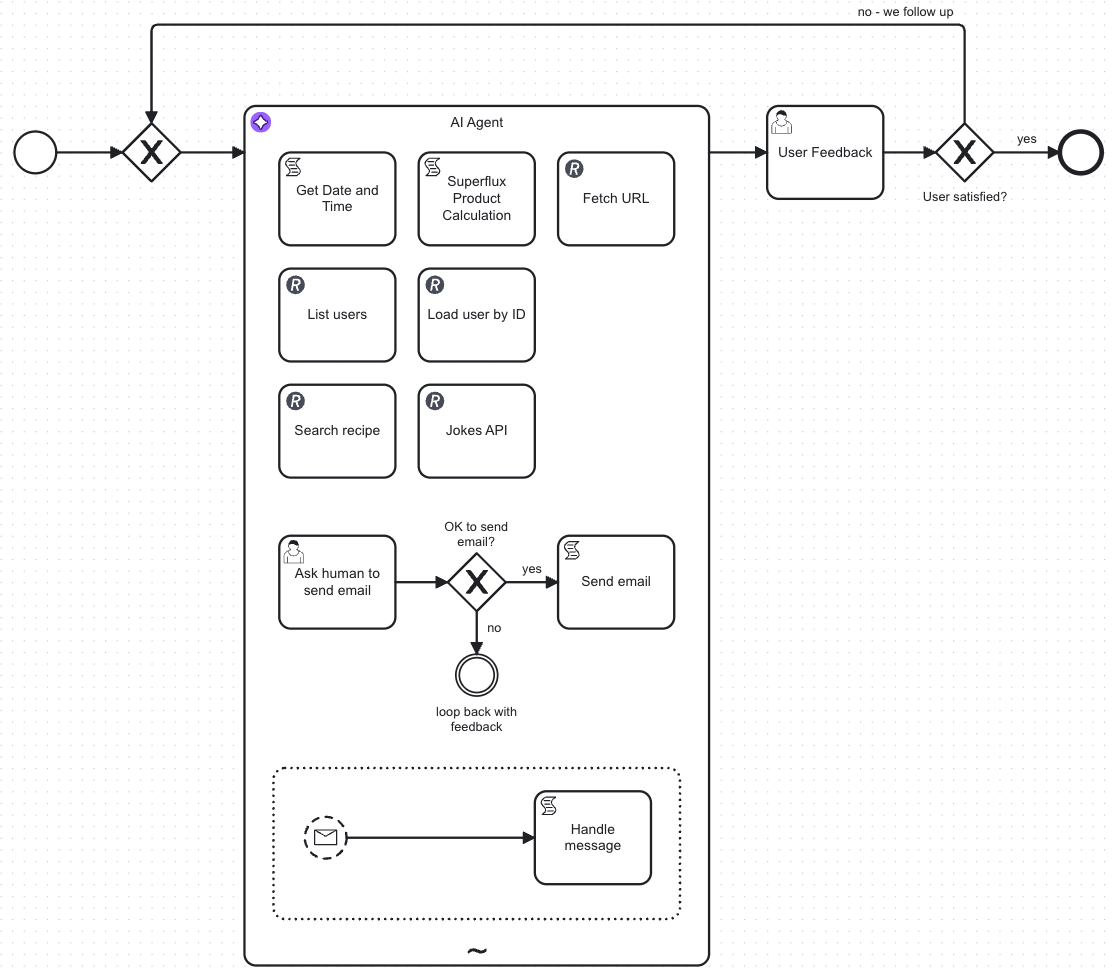

In Camunda, an AI agent refers to an automation solution that uses ad-hoc sub-processes to perform tasks with non-deterministic behavior.

AI agents represent the practical implementation of agentic process orchestration within Camunda, combining the flexibility of AI with the reliability of traditional process automation.

About this guide

In this guide, you will:

- Run Camunda 8 using Camunda 8 SaaS or locally with Camunda 8 Self-Managed.

- Deploy and start a business process using Web Modeler or locally with Desktop Modeler.

- Use an AI Agent connector to provide interaction and reasoning capabilities for the AI agent.

- Use an ad-hoc sub-process to define the tools the AI agent should use.

- Integrate a Large Language Model (LLM) into your AI agent.

Once you have completed this guide, you will have an example AI agent running within Camunda 8.

Prerequisites

The prerequisites for building your first AI agent depend on:

- Your working environment.

- Your chosen model.

Camunda 8 environment

To run your agent, you must have Camunda 8 (version 8.8 or newer) running, using either:

- Camunda 8 SaaS. For example, sign up for a free SaaS trial account.

- Camunda 8 Self-Managed. For example, see Run your first local project.

Supported models

The AI Agent connector makes it easy to integrate LLMs into your process workflows. It supports multiple model providers and can communicate with any LLM that exposes an OpenAI‑compatible API.

In this guide, you can try two use cases:

| Setup | Model provider | Model used | Prerequisites |
|---|---|---|---|
| Cloud | AWS Bedrock | Claude Sonnet 4 | |
| Local | Ollama | GPT-OSS:20b | |

Running LLMs locally requires substantial disk space and memory. GPT-OSS:20b needs more than 20 GB of RAM to run and 14 GB of free disk space to download.
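As a quick pre-check before pulling the model, a sketch like the following compares free disk space against the download size quoted above. It assumes decimal gigabytes and that you pass a path on the volume where Ollama stores its models; the function names are illustrative and not part of Ollama or Camunda.

```python
import shutil

# Download size quoted in this guide, assumed here to mean decimal gigabytes.
REQUIRED_DISK_GB = 14


def has_enough_disk(free_bytes: int, required_gb: float = REQUIRED_DISK_GB) -> bool:
    """Return True if free_bytes covers the required download size."""
    return free_bytes >= required_gb * 10**9


def check_download_location(path: str = ".") -> bool:
    """Check the volume that contains `path` (hypothetical model storage location)."""
    return has_enough_disk(shutil.disk_usage(path).free)
```

Calling `check_download_location()` with the directory you expect the model to land in gives a simple yes or no answer. It does not check available RAM.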

You can use a different LLM provider instead, such as OpenAI or Anthropic. For more information on how to configure the connector with your preferred LLM provider, see AI Agent connector.

Step 1: Install the example model blueprint

To start building your first AI agent, you can use a prebuilt Camunda blueprint process model.

In this tutorial, you will use the AI Agent Chat Quick Start blueprint from Camunda marketplace. Depending on your Camunda 8 working environment, follow the corresponding steps below.

SaaS:

- On the blueprint page, click For SAAS and select or create a project to save the blueprint.

- The blueprint BPMN diagram opens in Web Modeler.

Self-Managed:

- On the blueprint page, click For SM and download the blueprint files from the repository.

- Open the blueprint BPMN diagram in Desktop Modeler or in Web Modeler.

About the example AI agent process

The example AI agent process is a chatbot that you can interact with via a user task form.

The process showcases how an AI agent can:

- Make autonomous decisions about which tasks to execute based on your input.

- Adapt its behavior dynamically using the context provided.

- Handle complex scenarios by selecting and combining different tools.

- Integrate seamlessly with other process components.

The example includes a form linked to the start event, allowing you to submit requests ranging from simple questions to more complex tasks, such as document uploads.

Step 2: Configure the AI Agent connector

Depending on your model choice, configure the AI Agent connector accordingly.

- AWS Bedrock

- Ollama

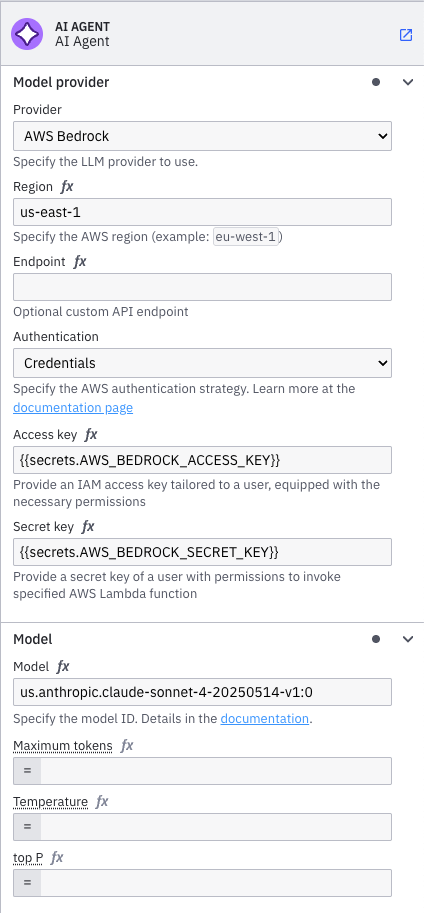

For AWS Bedrock, configure the connector's authentication and template as follows.

Configure authentication

The example blueprint downloaded in step one is preconfigured to use AWS Bedrock. For authentication, it uses the following connector secrets:

- `AWS_BEDROCK_ACCESS_KEY`: The AWS Access Key ID for your AWS account able to call the Bedrock Converse API.

- `AWS_BEDROCK_SECRET_KEY`: The AWS Secret Access Key for your AWS account.

You will configure these secrets differently depending on your working environment.

- SaaS: Configure the secrets using the Console.

- Self-Managed: Export the secrets as environment variables before starting the distribution. If you use Camunda 8 Run with Docker, add the secrets in the `connector-secrets.txt` file.

See Amazon Bedrock model provider for more information about other available authentication methods.
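For a Self-Managed setup, a small pre-flight check along these lines can confirm that both secrets are exported before you start the distribution. This is a sketch only: the helper name `missing_secrets` is hypothetical, and it inspects the environment of the current shell session, not the secrets stored in the SaaS Console.

```python
import os
from typing import Mapping

# Secret names used by the example blueprint, as listed above.
REQUIRED_SECRETS = ("AWS_BEDROCK_ACCESS_KEY", "AWS_BEDROCK_SECRET_KEY")


def missing_secrets(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required secret names that are unset or empty in `env`."""
    return [name for name in REQUIRED_SECRETS if not env.get(name)]
```

If `missing_secrets()` returns an empty list, both variables are visible to processes started from the same shell.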

Configure properties

In the blueprint BPMN diagram, the AI Agent connector template is applied to the AI Agent service task.

You can leave it as is or adjust its configuration to test other setups. To do so, use the properties panel of the AI Agent.

For Ollama, set up a local LLM and point the connector to it as follows.

Set up Ollama

- Download and install: Follow Ollama's documentation for details.

- Confirm installation: Start the application and check the running version in a terminal or command prompt with the command `ollama --version`.

- Pull the GPT-OSS:20b model: From the same terminal or command prompt, run `ollama pull gpt-oss:20b`.

- Start the local server: Run `ollama serve`.

- Test: Ollama serves an API by default at `http://localhost:11434`. To test it, use a tool like Postman or run this command from your terminal:

      curl -X POST http://localhost:11434/v1/chat/completions \
        -H "Content-Type: application/json" \
        -d '{"model":"gpt-oss:20b","messages":[{"role":"user","content":"Hello!"}]}'
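If you prefer a script to Postman or curl, the same test request can be sketched in Python using only the standard library. It assumes the default local endpoint and the model name used above, and that the response follows the OpenAI-style chat completion shape (`choices[0].message.content`). The function names are illustrative.

```python
import json
import urllib.request

# Default local endpoint from this guide; change it if Ollama listens elsewhere.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-oss:20b") -> dict:
    """Build the same JSON body that the curl command sends."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion."""
    return response["choices"][0]["message"]["content"]


def ask_ollama(prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """POST the prompt to the local server and return the model's reply."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as raw:
        return extract_reply(json.load(raw))
```

With the server running, `ask_ollama("Hello!")` should return the model's reply as a string. A connection error usually means the server is not running or is listening on a different port.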

Configure properties

The example blueprint downloaded in step one is preconfigured to use AWS Bedrock. Update the connector's configuration as follows to use Ollama instead.

Configure Camunda to point to your local Ollama API, which serves the GPT-OSS:20b LLM, using the Model provider and Model sections within the connector's properties panel.

Model provider

- Select OpenAI Compatible from the Provider dropdown.

- The default Ollama API is served at `http://localhost:11434/v1`, so enter this value in the API endpoint field.

- No authentication or additional headers are required for the local Ollama API, so leave the remaining fields blank.

Model section

- Enter `gpt-oss:20b` in the Model field. Note that this field is case-sensitive, so be sure to enter it in all lowercase.

When configuring connectors, you can click the fx icon to use FEEL expressions that reference process variables and create dynamic prompts based on runtime data.

For a reference of available configuration options, see AI Agent connector.

Step 3: Test your AI agent

You can now deploy and run your AI agent, and test it as a running process on your Camunda cluster running version 8.8 or higher.

Once you have started your process, you can then monitor the execution in Operate.

What to expect during execution

When you run the AI agent process:

- The AI agent receives your prompt and analyzes it.

- It determines which tools from the ad-hoc sub-process should be activated.

- Tasks can execute in parallel or sequentially, depending on the agent's decisions.

- Process variables are updated as each tool completes its execution.

- The agent may iterate through multiple tool calls to handle complex requests.

You can observe this dynamic behavior in real time through Operate, where you'll see which tasks were activated and in what order.
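The loop described above can be illustrated with a small, self-contained simulation. This is a conceptual sketch and not how the AI Agent connector is implemented: the tools, the scripted stand-in for the LLM, and all names are hypothetical, and the real agent decides which tools to call based on the model's output.

```python
from typing import Callable


# Hypothetical tools standing in for activities inside the ad-hoc sub-process.
def list_users() -> list[str]:
    return ["Ervin", "Leanne"]


def get_joke() -> str:
    return "Why did the process wait? It hit a timer event."


TOOLS: dict[str, Callable[[], object]] = {"list_users": list_users, "get_joke": get_joke}


def scripted_model(prompt: str, results: dict) -> dict:
    """Stand-in for the LLM: requests tools until it has what it needs."""
    if "list_users" not in results:
        return {"tool_calls": ["list_users"]}
    if "get_joke" not in results:
        return {"tool_calls": ["get_joke"]}
    return {"answer": f"Email for {results['list_users'][0]}: {results['get_joke']}"}


def run_agent(prompt: str, model=scripted_model, max_iterations: int = 5):
    results: dict = {}          # plays the role of process variables
    activated: list[str] = []   # order in which tools were activated
    for _ in range(max_iterations):
        decision = model(prompt, results)
        if "answer" in decision:
            return decision["answer"], activated
        for name in decision["tool_calls"]:
            results[name] = TOOLS[name]()  # variables update as each tool completes
            activated.append(name)
    raise RuntimeError("agent did not finish within the iteration limit")
```

Running `run_agent("Send Ervin a joke")` returns the final answer together with the order in which tools were activated, which corresponds to the sequence of tasks you would see in Operate. The iteration limit is a safeguard in this sketch against a model that never produces a final answer.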

- SaaS

- Self-Managed

In this example, you can quickly test the AI agent using the Play feature.

- Select the Play tab.

- Select the cluster you want to deploy and play the process on.

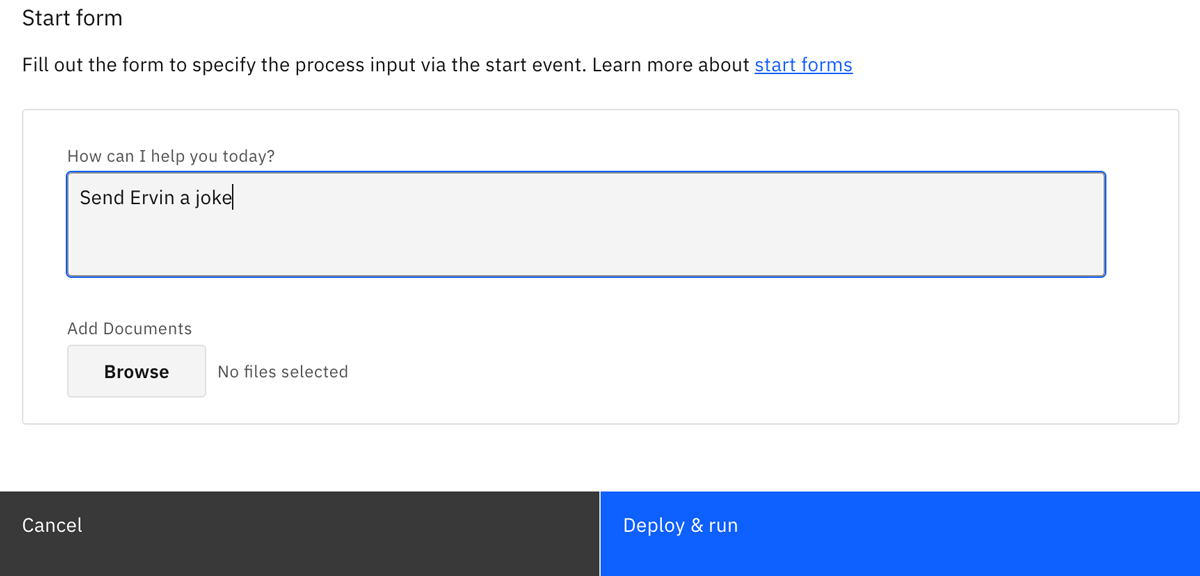

- Open the Start form and add a starting prompt for the AI agent. For example, enter "Tell me a joke" in the How can I help you today? field, and click Start instance.

- The AI agent analyzes your prompt, decides what tools to use, and responds with an answer. Open the Task form to view the result.

- You can follow up with more prompts to continue testing the AI agent. Select the Are you satisfied with the result? checkbox when you want to finish your testing and complete the process.

Instead of using Play, you can also test the process within the Implement tab using Deploy & Run, and use Tasklist to complete the form.

- Deploy the process model to your local Camunda 8 environment using Desktop Modeler.

- Open Tasklist in your browser. For example at http://localhost:8080/tasklist, depending on your environment.

- On the Processes tab, find the

AI Agent Chat With Toolsprocess and click Start process. - In the start form, add a starting prompt for the AI agent. For example, enter "Tell me a joke" in the How can I help you today? field, and click Start process.

- The AI agent analyzes your prompt, decides what tools to use, and responds with an answer.

- Select the Tasks tab in Tasklist. When the AI agent finishes processing, you should see either a

User Feedbackor aAsk human to send emailtask waiting for you to complete. - You can follow up with more prompts to continue testing the AI agent. Select the Are you satisfied with the result? checkbox when you want to finish the process.

Example prompts

The following example prompts are provided as guidance to help you test your AI agent.

| Prompt | Description |
|---|---|
| "Send Ervin a joke" | Showcases multiple tool call iterations. The AI agent fetches a list of users, finds the matching user, fetches a joke, and compiles an email to send to the user (Ervin) with the joke. For easier testing, it does not actually send an email, but uses a user task to instruct a "human operator" to handle sending the email. The operator can give feedback, such as "I can't send an email without emojis" or "include a Spanish translation". |
| "What is the superflux product of 3 and 10?" | Executes an imaginary superflux calculation, using the provided tool. |
| "Go and fetch <url> and tell me about it" | The AI agent fetches the specified URL and provides you with a summary of the content. After returning with a response, you can ask follow-up questions. |
| "Tell me about this document" | You can upload a document in the prompt form, and get the AI agent to provide you with a summary of the content. Note that this is limited to smaller documents by the Bedrock API. |

Next steps

Now that you’ve built your first Camunda AI agent, why not try customizing it further?

For example:

- Add and configure more tools in the ad-hoc sub-process that the AI agent can use.

- Change the provided system prompt to adjust the behavior of the AI agent.

- Experiment with different model providers and configurations in the AI Agent connector.

You can also:

- Learn more about Camunda agentic orchestration and the AI Agent connector.

- Read the Building Your First AI Agent in Camunda blog.

- Explore other AI blueprints from Camunda marketplace.

Register for the free Camunda 8 - Agentic Orchestration course to learn how to model, deploy, and manage AI agents in your end-to-end processes.